One-Stop Azure Cert Guide for a GCP Professional: Microsoft Azure Certification DP-200: Implementing an Azure Data Solution

As a Technical Architect at MediaAgility, I have refined my approach to designing new customer solutions for large enterprises, and I have become more inclined toward real learning, not just studying and memorizing.

Why I chose to learn Azure:

In the last two years, I designed GCP solutions for almost five enterprises, and they had one thing in common: every enterprise was using, or willing to use, a hybrid cloud strategy regardless of its industry and services. The compelling reasons were avoiding vendor lock-in, using the best services across cloud providers, and staying at the front line of their competition.

I work extensively on GCP, but I also take care of the areas where it intersects with Azure and AWS. After completing My 6X Google Cloud Certification Journey, I decided to start with Azure to gain more in-depth knowledge.

DP-200 Journey:

Brief:

At the start of 2019, Microsoft released two Azure Data exams: Implementing an Azure Data Solution (DP-200) and Designing an Azure Data Solution (DP-201). If you pass both of these exams, you become a Microsoft Certified Azure Data Engineer Associate.

Steps:

1. Reverse engineering Google Cloud for Azure professionals: I used this guide in reverse. It compares Google Cloud with Azure, highlights the similarities and differences between the two, and provides quick-reference mappings of Azure products, concepts, and terminology to the corresponding products, concepts, and terminology on Google Cloud.

2. Gold content in the Microsoft Learn learning paths: In my opinion, this is the best learning resource of all. The estimated total time for all of the following modules was around 30 hours; however, I ended up spending around 100 hours because I also did the hands-on experiments.

- Azure for the Data Engineer

- Store data in Azure

- Work with relational data in Azure

- Work with NoSQL data in Azure Cosmos DB

- Large Scale Data Processing with Azure Data Lake Storage Gen2

- Implement a Data Streaming Solution with Azure Streaming Analytics

- Implement a Data Warehouse with Azure Synapse Analytics

- Perform data engineering with Azure Databricks

- Extract knowledge and insights from your data with Azure Databricks

3. Notes: I took some useful notes in Evernote, which I plan to consolidate and share in a public Git repo. If you are interested, please leave a comment below or find me on LinkedIn.

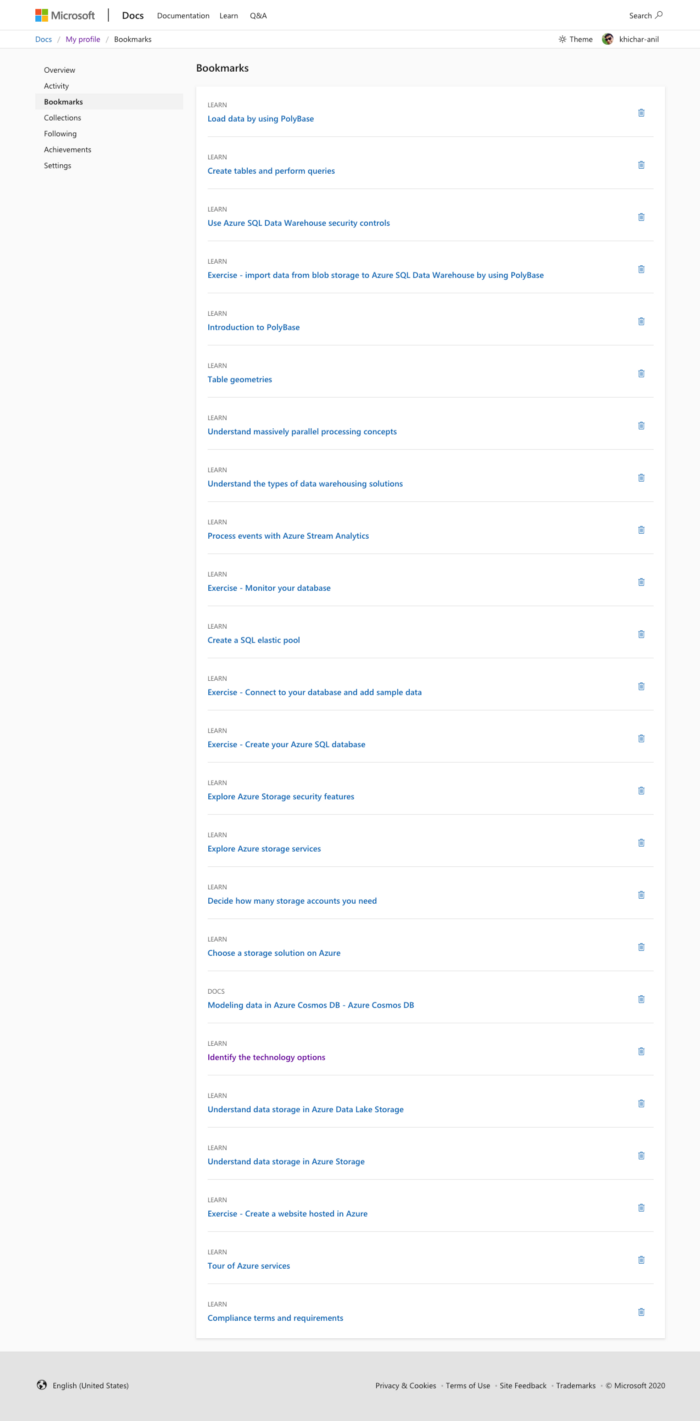

4. Must-read content: This was my revision list, and it can make you the winner if you find yourself in a close race. If you are already well prepared and want content to cover on the last day, here it is:

4.a. Implement Data Storage Solutions:

Cosmos DB

- Understand the Cosmos DB use cases.

- It's really important to know the decision criteria for choosing among the Cosmos DB APIs.

- Deep dive into Cosmos DB partitioning and learn about query performance tuning, such as choosing the partition key and creating a lookup collection.

- Understand what Cosmos DB request units (RUs) represent so that you can estimate the number of RUs a particular workload needs and save cost.
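A back-of-the-envelope RU estimate is just the sum of (operations per second × RU per operation), plus some headroom. Here is a minimal Python sketch of that arithmetic. Microsoft documents a point read of a 1 KB item as costing 1 RU. The 6 RU write cost, the 20% headroom, and the workload itself are assumptions for illustration only. Real charges depend on item size, indexing policy, and consistency level, so measure them from the request charge returned with each response before you size a container.

```python
import math
from dataclasses import dataclass


@dataclass
class Operation:
    name: str
    per_second: float  # expected operations per second at peak load
    ru_per_op: float   # ASSUMED RU charge per operation; measure your own


def estimate_rus(ops: list[Operation], headroom: float = 0.2) -> int:
    """Estimate provisioned RU/s as the sum of (rate x cost) plus headroom.

    Manual throughput is provisioned in steps of 100 RU/s, so the result
    is rounded up to the next multiple of 100.
    """
    base = sum(op.per_second * op.ru_per_op for op in ops)
    return math.ceil(base * (1 + headroom) / 100) * 100


# Hypothetical workload: 500 point reads/s of ~1 KB items (1 RU each)
# and 50 inserts/s at an assumed ~6 RU each.
workload = [
    Operation("point read", 500, 1.0),
    Operation("insert", 50, 6.0),
]
print(estimate_rus(workload))  # 800 RU/s * 1.2 = 960 -> 1000
```

The headroom is there because peak traffic rarely matches the average. The RU cost per operation is the number most worth validating with real measurements.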

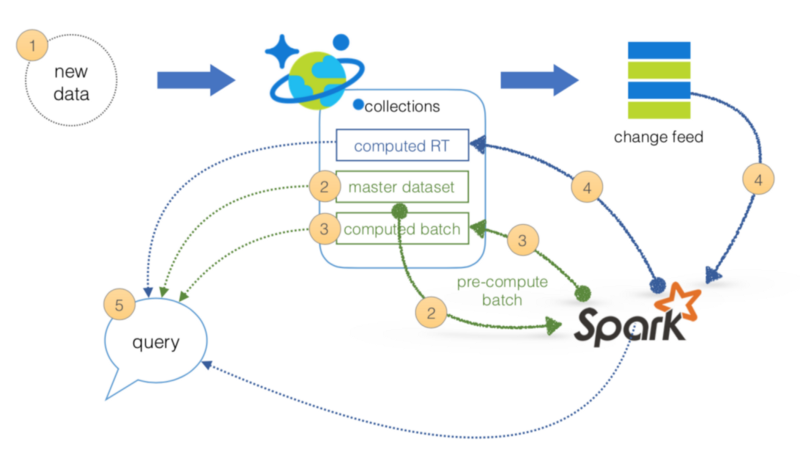

- Implement a lambda architecture on the Azure platform. Get a clear understanding of which service to use for each part of this approach, for example Apache Spark vs. Apache Hadoop.

- Understand the HDFS architecture, including the roles and use cases of the NameNode, the Secondary NameNode, and the DataNodes.
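Back to the Cosmos DB partitioning bullet: a good partition key has high cardinality, so that data and requests spread evenly. The sketch below hashes items into a fixed number of buckets and compares how evenly two candidate keys spread a hypothetical order dataset. The `customerId` and `country` fields are hypothetical. Treat this only as an analogy. Cosmos DB uses its own internal hashing and splits physical partitions automatically, so the four buckets and the MD5 hash here are assumptions chosen for illustration.

```python
import hashlib
from collections import Counter


def bucket(key: str, buckets: int) -> int:
    """Map a partition-key value to a bucket with a stable hash (MD5 here)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def skew(items: list[dict], key_field: str, buckets: int = 4) -> float:
    """Largest bucket divided by the ideal even share (1.0 = perfectly even)."""
    counts = Counter(bucket(str(item[key_field]), buckets) for item in items)
    ideal = len(items) / buckets
    return max(counts.values()) / ideal


# Hypothetical orders: only 2 distinct countries, but 1,000 distinct customers.
orders = [
    {"customerId": f"c{i}", "country": "US" if i % 10 else "CA"}
    for i in range(1000)
]
print(f"country:    {skew(orders, 'country'):.2f}")
print(f"customerId: {skew(orders, 'customerId'):.2f}")
```

The low-cardinality `country` key pushes at least 90% of the items into one bucket, creating a hot partition. The `customerId` key stays close to an even spread.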

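For the lambda architecture bullet, it helps to see the three layers in one place:

- a batch layer that recomputes complete views over all historical data;
- a speed layer that keeps incremental views over the recent data the last batch run has not yet covered;
- a serving layer that merges both at query time.

The sketch below shows only that merge logic, in plain Python with hypothetical page-view events and a hypothetical batch cutoff. On Azure, the batch view might come from Spark on Databricks or HDInsight and the speed view from Stream Analytics, but no Azure service is used here.

```python
from collections import Counter
from typing import Iterable

Event = tuple[int, str]  # (timestamp in seconds, page)


def batch_view(events: Iterable[Event], cutoff: int) -> Counter:
    """Batch layer: recompute counts over every event before the cutoff."""
    return Counter(page for ts, page in events if ts < cutoff)


def speed_view(events: Iterable[Event], cutoff: int) -> Counter:
    """Speed layer: incremental counts for events the batch run has not covered."""
    return Counter(page for ts, page in events if ts >= cutoff)


def serve(batch: Counter, speed: Counter, page: str) -> int:
    """Serving layer: answer a query by merging both views."""
    return batch[page] + speed[page]


events = [(10, "/home"), (20, "/pricing"), (30, "/home"), (95, "/home")]
last_batch_run = 90  # hypothetical: the last batch job covered ts < 90
b = batch_view(events, last_batch_run)
s = speed_view(events, last_batch_run)
print(serve(b, s, "/home"))  # 2 from batch + 1 from speed = 3
```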
Storage

- Manage the Azure Blob storage lifecycle. Try the example in this post that applies a lifecycle policy. hands-on

- Learn about access control in Azure Data Lake Storage Gen2. Pay special attention to the Azure AD setup when applying ACLs. hands-on

- Configure Azure Storage firewalls and virtual networks. hands-on
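A lifecycle policy is a JSON document of rules. Each rule's base-blob actions (`tierToCool`, `tierToArchive`, `delete`) fire once a blob's age passes a threshold such as `daysAfterModificationGreaterThan`. The Python sketch below builds such a policy and then simulates locally which action would apply to a blob of a given age. The rule name, the `logs/app/` prefix (prefixes start with the container name), and the day thresholds are all hypothetical. When several actions qualify, the lifecycle docs say the least expensive one wins (delete, then archive, then cool), and the simulation mirrors that. Apply the real policy through the portal, Azure CLI, or PowerShell, as in the linked example.

```python
import json

# Hypothetical rule for container "logs": cool at 30 days, archive at 90, delete at 365.
policy = {
    "rules": [
        {
            "enabled": True,
            "name": "age-out-app-logs",
            "type": "Lifecycle",
            "definition": {
                "filters": {"blobTypes": ["blockBlob"], "prefixMatch": ["logs/app/"]},
                "actions": {
                    "baseBlob": {
                        "tierToCool": {"daysAfterModificationGreaterThan": 30},
                        "tierToArchive": {"daysAfterModificationGreaterThan": 90},
                        "delete": {"daysAfterModificationGreaterThan": 365},
                    }
                },
            },
        }
    ]
}
policy_json = json.dumps(policy, indent=2)  # the JSON body you would submit

# Cheapest action first: if several thresholds are exceeded, the cheapest applies.
ACTION_ORDER = ("delete", "tierToArchive", "tierToCool")


def action_for(blob_path: str, days_since_modified: int) -> str:
    """Local simulation of which base-blob action the policy would take."""
    for rule in policy["rules"]:
        definition = rule["definition"]
        prefixes = definition["filters"]["prefixMatch"]
        if not rule["enabled"] or not any(blob_path.startswith(p) for p in prefixes):
            continue
        actions = definition["actions"]["baseBlob"]
        for name in ACTION_ORDER:
            limit = actions.get(name, {}).get("daysAfterModificationGreaterThan")
            if limit is not None and days_since_modified > limit:
                return name
    return "none"


print(action_for("logs/app/2020/07/01.log", 120))  # tierToArchive
```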

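For the ADLS Gen2 ACL bullet, the rule most worth internalizing is that permissions are POSIX-style. To read a file, a caller needs Execute (`--x`) on every folder from the root down to the file's parent, plus Read (`r--`) on the file itself. The sketch below checks that rule for a single user against a hypothetical set of ACL entries; the users `alice` and `bob` and all the paths are made up. It deliberately ignores Azure RBAC role assignments, groups, the mask, owners, and the sticky bit, and it does not use the Azure SDK.

```python
from pathlib import PurePosixPath

# Hypothetical ACLs: path -> {user: permission string}.
acls = {
    "/": {"alice": "--x", "bob": "--x"},
    "/raw": {"alice": "--x"},
    "/raw/sales": {"alice": "r-x", "bob": "r-x"},
    "/raw/sales/2020.csv": {"alice": "r--", "bob": "r--"},
}


def has(path: str, user: str, perm: str) -> bool:
    """True if the user's ACL entry on `path` contains the permission letter."""
    return perm in acls.get(path, {}).get(user, "---")


def can_read_file(path: str, user: str) -> bool:
    """Read needs --x on every ancestor folder and r-- on the file itself."""
    folders = [str(p) for p in list(PurePosixPath(path).parents)[::-1]]  # "/" first
    return all(has(f, user, "x") for f in folders) and has(path, user, "r")


print(can_read_file("/raw/sales/2020.csv", "alice"))  # True
print(can_read_file("/raw/sales/2020.csv", "bob"))    # False: no --x on /raw
```

Bob's failure is the classic surprise: he has Read on the file, but the missing Execute on an intermediate folder blocks the whole path.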
Azure SQL Database & Azure SQL Data Warehouse (Synapse Analytics)

- Which SQL option should I choose?

- Export an Azure SQL database to a BACPAC file. hands-on

- Learn how to protect sensitive data in a SQL database with Always Encrypted by using the Always Encrypted wizard. hands-on

- Explore the Azure SQL Database Advanced Threat Protection features and the steps to enable them. hands-on

- Enable TDE (Transparent Data Encryption) in an experiment and note the steps. Memory trick, MCED: Master key, Certificate, database Encryption key, then enable encryption on the Database. hands-on

- Do an experiment using PowerShell and Azure Cloud Shell. hands-on

- Configure IP firewall rules. hands-on

- Read about dynamic data masking for Azure SQL Database and Azure Synapse Analytics. Pay special attention to the built-in masking functions and when to use each one (Default, Credit Card, Email, Random Number, Custom Text).

- PolyBase: Run this hands-on experiment several times to load data from ADLS into the data warehouse, and memorize all the steps in the correct sequence. Memory trick, MCSFTL: Master key, Credential, external data Source, external File format, external Table, Load (CTAS). Load New York Taxicab dataset hands-on

- DW performance benchmarking: This example benchmarks DW performance and concludes that you should use CTAS and partition switching instead of UPDATE and DELETE operations wherever possible. Make sure you fully understand this fundamental approach. hands-on
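To get a feel for what each built-in masking function returns, the Python sketch below imitates their documented output formats on sample values:

- `default()` fully masks the value: XXXX for strings, 0 for numbers.
- `email()` keeps the first letter and shows a constant `XXX@XXXX.com`.
- The credit-card mask exposes only the last four digits.
- `random()` substitutes a number from a range.
- `partial(prefix, padding, suffix)` is the custom-text mask.

This is only a local imitation for study. In Azure SQL, masks are declared on columns in T-SQL (`MASKED WITH (FUNCTION = '...')`), and the engine applies them for users who lack the UNMASK permission. The sample values are hypothetical.

```python
import random


def mask_default(value):
    """default(): 0 for numbers; XXXX for strings (fewer Xs if shorter than 4).

    Simplification: SQL uses the column's declared size, not the value length.
    """
    if isinstance(value, str):
        return "X" * min(len(value), 4)
    return 0


def mask_email(value: str) -> str:
    """email(): keep the first letter, then the constant 'XXX@XXXX.com'."""
    return value[:1] + "XXX@XXXX.com"


def mask_partial(value: str, prefix: int, padding: str, suffix: int) -> str:
    """partial(prefix, padding, suffix): the custom-text mask."""
    tail = value[-suffix:] if suffix > 0 else ""
    return value[:prefix] + padding + tail


def mask_credit_card(value: str) -> str:
    """Credit card: a partial() mask that exposes only the last four digits."""
    return mask_partial(value, 0, "XXXX-XXXX-XXXX-", 4)


def mask_random(low: int, high: int) -> int:
    """random(low, high): replace a numeric value with one from the range."""
    return random.randint(low, high)


print(mask_default("Seattle"))                  # XXXX
print(mask_email("jane.doe@contoso.com"))       # jXXX@XXXX.com
print(mask_credit_card("4111111111111111"))     # XXXX-XXXX-XXXX-1111
print(mask_partial("555-0100", 2, "XX-XX", 2))  # 55XX-XX00
print(mask_random(1, 12))
```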

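The PolyBase memory trick MCSFTL maps one-to-one onto T-SQL statements. The Python sketch below simply assembles those statements, in MCSFTL order, into a script you could paste into a query editor. Every object name, the storage URL, the two-column schema, and the `|` delimiter are hypothetical placeholders. The `TYPE = HADOOP` data source reflects the PolyBase syntax for dedicated SQL pools (formerly SQL DW), so check the statements against the current docs and the tutorial before running them.

```python
# Hypothetical object names; replace every <...> placeholder before use.
STEPS = [
    ("M", "Master key",
     "CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<strong password>';"),
    ("C", "Credential",
     "CREATE DATABASE SCOPED CREDENTIAL AdlsCredential "
     "WITH IDENTITY = 'user', SECRET = '<storage account key>';"),
    ("S", "External data source",
     "CREATE EXTERNAL DATA SOURCE AdlsSource WITH (TYPE = HADOOP, "
     "LOCATION = 'abfss://<container>@<account>.dfs.core.windows.net', "
     "CREDENTIAL = AdlsCredential);"),
    ("F", "External file format",
     "CREATE EXTERNAL FILE FORMAT PipeDelimited WITH (FORMAT_TYPE = DELIMITEDTEXT, "
     "FORMAT_OPTIONS (FIELD_TERMINATOR = '|'));"),
    ("T", "External table",
     "CREATE EXTERNAL TABLE dbo.TripExternal (TripId INT, FareAmount DECIMAL(10, 2)) "
     "WITH (LOCATION = '/trip/', DATA_SOURCE = AdlsSource, FILE_FORMAT = PipeDelimited);"),
    ("L", "Load with CTAS",
     "CREATE TABLE dbo.Trip WITH (DISTRIBUTION = ROUND_ROBIN) "
     "AS SELECT * FROM dbo.TripExternal;"),
]


def build_script(steps=STEPS) -> str:
    """Join the statements in MCSFTL order, each preceded by a comment line."""
    lines = []
    for letter, label, sql in steps:
        lines.append(f"-- {letter}: {label}")
        lines.append(sql)
    return "\n".join(lines)


print(build_script())
```

Each step depends on the one before it: the credential needs the master key, the data source needs the credential, and the external table needs both the data source and the file format.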
4.b. Manage and develop data processing:

Azure Data Factory

- Understand the differences between the available integration runtime types (Azure, self-hosted, and Azure-SSIS). Pay special attention to the self-hosted integration runtime.

- Azure Data Factory Copy Activity: Learn the different ways to map schemas between source and sink. hands-on
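For explicit schema mapping, the Copy activity accepts a `translator` of type `TabularTranslator` whose `mappings` pair source and sink column names. The sketch below writes such a mapping as a Python dict and applies it locally to a sample row, to show what the sink would receive. The column names are hypothetical, and only the basic name-to-name form is shown. This sketch simply drops source columns that are not mapped.

```python
# Hypothetical column names; the dict shape follows ADF's TabularTranslator.
translator = {
    "type": "TabularTranslator",
    "mappings": [
        {"source": {"name": "Id"}, "sink": {"name": "CustomerID"}},
        {"source": {"name": "Name"}, "sink": {"name": "LastName"}},
        {"source": {"name": "LastModifiedDate"}, "sink": {"name": "ModifiedDate"}},
    ],
}


def apply_mapping(rows: list[dict], translator: dict) -> list[dict]:
    """Rename mapped columns; this sketch drops any unmapped source columns."""
    pairs = [(m["source"]["name"], m["sink"]["name"]) for m in translator["mappings"]]
    return [{sink: row[src] for src, sink in pairs} for row in rows]


source_rows = [
    {"Id": 1, "Name": "Contoso", "LastModifiedDate": "2020-06-01", "Extra": "x"},
]
print(apply_mapping(source_rows, translator))
# [{'CustomerID': 1, 'LastName': 'Contoso', 'ModifiedDate': '2020-06-01'}]
```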

Storage & HDInsight

- Get in-depth knowledge of using Azure Data Lake Storage Gen2 for big data requirements. Also, learn about different Hadoop tools discussed in this article.

- Choose the correct HDInsight configuration to build open-source analytics solutions. Pay special attention to the use cases and understand when to use Storm vs. Spark, and so on.

Azure Databricks

- Learn about the technology choices for batch processing and the decision criteria for choosing one over the others.

- Perform ETL using Azure Databricks. Pay special attention to “Load data into Azure SQL Data Warehouse”. hands-on

- Experiment with different cluster configurations. hands-on

Azure Stream Analytics

- Windowing functions: You must know the practical differences between all the Stream Analytics windowing functions and their usage (Tumbling, Hopping, Sliding, and Session windows). hands-on

- Learn how to use reference (lookup) data in an Azure Stream Analytics streaming pipeline. hands-on

- Azure Stream Analytics on IoT Edge
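The Stream Analytics windows differ in how they group events in time:

- Tumbling windows are fixed and non-overlapping.
- Hopping windows are fixed-size but start every hop, so they overlap.
- Session windows close after a gap of inactivity.

The Python sketch below assigns hypothetical event timestamps (in seconds) to tumbling, hopping, and session windows so the differences are concrete. It mimics the semantics only; it is not the Stream Analytics query language, where you would write, for example, `GROUP BY TumblingWindow(second, 10)`. Windows here are half-open `[start, start + size)` intervals aligned to time 0, and the session window's maximum-duration cap is omitted. Both are simplifications. Sliding windows are left out because they emit output whenever an event enters or exits the window, rather than on fixed boundaries.

```python
from collections import defaultdict


def tumbling(events: list[int], size: int) -> dict[int, list[int]]:
    """Fixed, non-overlapping windows; each event lands in exactly one."""
    windows = defaultdict(list)
    for t in events:
        windows[(t // size) * size].append(t)  # key = window start
    return dict(windows)


def hopping(events: list[int], size: int, hop: int) -> dict[int, list[int]]:
    """Fixed-size windows starting every `hop`; an event can land in several."""
    windows = defaultdict(list)
    for t in events:
        start = (t // hop) * hop  # latest window start at or before t
        # Walk back over every window [start, start + size) that still contains t,
        # ignoring windows that would start before time 0.
        while start > t - size and start >= 0:
            windows[start].append(t)
            start -= hop
    return dict(sorted(windows.items()))


def session(events: list[int], timeout: int) -> list[list[int]]:
    """Group events whose gap to the previous event is <= timeout."""
    sessions: list[list[int]] = []
    for t in sorted(events):
        if sessions and t - sessions[-1][-1] <= timeout:
            sessions[-1].append(t)
        else:
            sessions.append([t])
    return sessions


events = [1, 4, 12, 13, 27]
print(tumbling(events, 10))    # {0: [1, 4], 10: [12, 13], 20: [27]}
print(hopping(events, 10, 5))  # {0: [1, 4], 5: [12, 13], 10: [12, 13], 20: [27], 25: [27]}
print(session(events, 5))      # [[1, 4], [12, 13], [27]]
```

Note how the hopping output repeats events across overlapping windows, while the tumbling and session outputs never do.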

4.c. Monitor and optimize data solutions:

- Understand the SQL auditing features and do a hands-on experiment to find out who accessed what, and when, in Azure SQL Database and the data warehouse. hands-on

- Learn how to enable automatic tuning for Azure SQL Database, and pay special attention to how the tuning options (Force Plan, Create Index, and Drop Index) are inherited. hands-on

- Read about and understand the in-memory technologies that can improve performance without raising the database service tier. hands-on

- Understand the materialized view design pattern and think about how it can speed up a slow-performing SQL query. hands-on

- Learn how to enable and configure logging of diagnostic telemetry for Azure SQL databases. Pay special attention to the destinations for metrics and logs, such as Azure SQL Analytics, Azure Event Hubs, and Azure Storage. hands-on

- Absorb the ADLS Gen2 performance optimization techniques, especially file sizing and organizing data into folders.

- Explore monitoring Azure Data Factory with Azure Monitor, and think about use cases such as analyzing the last quarter's logs to find actionable trends. hands-on

- Learn about high-availability clusters for the on-premises data gateway, which avoid single points of failure and load balance traffic across the gateways in a cluster.

- Understand the use of Azure Log Analytics to monitor HDInsight clusters. Pay special attention to “Install HDInsight cluster management solutions”. hands-on
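Automatic tuning settings cascade: a database can inherit each option from its server, and the server can inherit from the Azure defaults, unless an option is set explicitly at a lower level. In T-SQL, setting an option to DEFAULT makes the database inherit it from the server. The sketch below resolves the effective value of each option through that chain: `FORCE_LAST_GOOD_PLAN` (Force Plan), `CREATE_INDEX`, and `DROP_INDEX`. The Azure default values in the dict are an assumption (Force Plan on, index creation and dropping off). Verify them in the current documentation, since Microsoft has changed these defaults over time. The server and database settings are hypothetical.

```python
# ASSUMED Azure defaults; verify against the current Microsoft documentation.
AZURE_DEFAULTS = {"FORCE_LAST_GOOD_PLAN": True, "CREATE_INDEX": False, "DROP_INDEX": False}

INHERIT = None  # marker: this level inherits the option from its parent


def effective_settings(server: dict, database: dict) -> dict:
    """Resolve each option in the order database -> server -> Azure defaults."""
    resolved = {}
    for option, default in AZURE_DEFAULTS.items():
        value = database.get(option, INHERIT)
        if value is INHERIT:
            value = server.get(option, INHERIT)
        if value is INHERIT:
            value = default
        resolved[option] = value
    return resolved


# Hypothetical setup: the server turns CREATE_INDEX on, and one database
# explicitly turns FORCE_LAST_GOOD_PLAN off.
server = {"CREATE_INDEX": True}
database = {"FORCE_LAST_GOOD_PLAN": False}
print(effective_settings(server, database))
# {'FORCE_LAST_GOOD_PLAN': False, 'CREATE_INDEX': True, 'DROP_INDEX': False}
```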

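The ADLS Gen2 folder and file-size advice can be made concrete. The best-practices guidance suggests two things:

- Organize time-series data into date-based folders, for example `{Region}/{SubjectMatter}/{yyyy}/{mm}/{dd}/{hh}/`.
- Avoid many small files, because each file adds overhead for analytics engines.

The sketch below builds such a path and works out how many output files a job should produce for a target file size. The region and subject names are hypothetical. The 256 MiB target is an assumption, so tune it to your engine and check the current guidance.

```python
import math
from datetime import datetime


def partition_path(region: str, subject: str, ts: datetime) -> str:
    """Date-partitioned folder: {Region}/{Subject}/{yyyy}/{mm}/{dd}/{hh}/."""
    return f"{region}/{subject}/{ts:%Y/%m/%d/%H}/"


def files_needed(total_bytes: int, target_file_bytes: int = 256 * 1024**2) -> int:
    """Number of output files so that each one is close to the ASSUMED target size."""
    return max(1, math.ceil(total_bytes / target_file_bytes))


print(partition_path("NA", "Sensors", datetime(2020, 7, 14, 9)))  # NA/Sensors/2020/07/14/09/
print(files_needed(10 * 1024**3))  # 10 GiB at ~256 MiB per file -> 40
```

Date-based folders let queries prune whole directories by time range, and fewer, larger files cut the per-file overhead.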
…

The core mantra has to be: never aim just for the certification; instead, understand the platform and technology, because this helps us design better solutions for our customers and do higher-quality work. These are the perks of being a lifelong learner.

In this post, I shared all the resources that I found and used. Always check the latest official information from Microsoft before your exam.

Prepare well and all the best for the exam!